Hi, I'm Matt.

Think. Build. Repeat.

With years of experience in full-stack and XR development, I build robust, scalable applications and immersive experiences. My career began in backend development, and a passion for creating engaging interactive content led me to Unity. Since then, I have deployed cross-platform XR applications to HoloLens, Magic Leap, Quest, Android, and desktop, using frameworks such as Photon, Cesium, and MRTK2. My technical expertise spans .NET Core, Angular, Node.js, React, and Unity, which lets me lead and support both proof-of-concept projects and large-scale deployments.

My personal game development projects are a collection of passion projects at various stages of completion, each racing the others to cross the finish line first! They let me exercise my creativity and technical skills by experimenting with unique game mechanics and compelling narratives, and they double as a testing ground for new ideas and technologies. I've included them on my portfolio page to highlight different game mechanics, concepts, and artistic styles. None of them are fully polished; they are presented in their raw, in-development state to show their potential and my creative process.

The HoloLens application lets the user scale, rotate, and place a live view of the assembly line inspection station anywhere. Cars are spawned in their matching paint color as they pass through the station. Incoming AI-processed images are rendered onto the car at their given coordinates in 3D space. As each inspection result is published, a marker appears at the inspection’s precise location and is assigned either a pass or a fail. Each inspection can be selected to view its AI results and a larger image of the inspection. Cars with more than a set number of failures are highlighted with a large exclamation mark.

A car can be dragged off the assembly line to be rotated, moved, and scaled for closer inspection.
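One way to model the pass/fail markers and the exclamation-mark rule is sketched below in Python. It assumes each published result carries a 3D position and a pass flag and is attached to a car; the class and function names (`Inspection`, `Car`, `publish_result`) and the `max_failures` threshold are hypothetical, not taken from the actual HoloLens (Unity) code.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Inspection:
    inspection_id: str
    position: Tuple[float, float, float]  # where the marker is drawn on the car (3D)
    passed: bool


@dataclass
class Car:
    car_id: str
    paint_color: str
    inspections: List[Inspection] = field(default_factory=list)

    def failure_count(self) -> int:
        return sum(1 for i in self.inspections if not i.passed)

    def needs_highlight(self, max_failures: int) -> bool:
        # Flag the car (e.g. with the exclamation mark) once failures exceed the limit.
        return self.failure_count() > max_failures


def publish_result(car: Car, result: Inspection, max_failures: int) -> bool:
    """Attach a newly published result to its car; return whether the car is now flagged."""
    car.inspections.append(result)
    return car.needs_highlight(max_failures)


car = Car("car-001", "red")
print(publish_result(car, Inspection("i1", (0.1, 0.5, 1.2), passed=False), max_failures=1))  # False
print(publish_result(car, Inspection("i2", (0.3, 0.4, 0.9), passed=False), max_failures=1))  # True
```

The rule uses a strict "more than" comparison, matching the description: a car with exactly `max_failures` failures is not yet flagged.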

The desktop application is a dashboard for those who need to monitor inspection results and assembly line data in real time. Inspection results can be filtered by type or vehicle, and an overall pass/fail percentage is tallied to help identify problems on the line or with the AI models.
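The dashboard's filtering and pass/fail tally can be sketched as follows. This is a minimal Python illustration under the assumption that each result records a vehicle ID, an inspection type, and a pass flag; `Result`, `filter_results`, and `pass_rate` are hypothetical names rather than the dashboard's real code.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Result:
    vehicle_id: str
    inspection_type: str
    passed: bool


def filter_results(results: Iterable[Result],
                   inspection_type: Optional[str] = None,
                   vehicle_id: Optional[str] = None) -> List[Result]:
    # A filter left as None matches everything.
    return [r for r in results
            if (inspection_type is None or r.inspection_type == inspection_type)
            and (vehicle_id is None or r.vehicle_id == vehicle_id)]


def pass_rate(results: Iterable[Result]) -> Optional[float]:
    """Percentage of passing results, or None when nothing matches."""
    results = list(results)
    if not results:
        return None
    return 100.0 * sum(r.passed for r in results) / len(results)
```

Returning `None` for an empty selection keeps an empty filter from showing up as a misleading 0% pass rate.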

Monster Tag is a multiplayer tag-based game that keeps players engaged and working toward goals no matter which team they are on. At the start of the game, one player is infected. The identity of this infected player (the Monster) is unknown to the other players (the Humans). Players have 30 seconds to hide on the map or blend in among numerous AI-controlled Humans. Once the 30 seconds are up, the infected player can fully transform into a Monster and begin the hunt, or keep their identity concealed for up to 30 more seconds to do some incognito reconnaissance.

Each game lasts 8 minutes in total. The Humans' goal is to survive until sunrise, when the Monsters lose their invulnerability and can be killed. The Monsters' goal is to hunt down and infect all Humans before sunrise. During the game, Humans can loot the map for items that help them avoid capture, cure infections, and craft weapons to eliminate the Monsters after sunrise.

Each player class has a unique ability. The common Human ability is ‘stun’, which can stop Monsters in their tracks at close range and give the Human a chance to slip away. Monsters like the Zombie and Werewolf have ‘frenzy’, which gives them varying boosts in mobility to chase down a Human or quickly traverse the map. Other Monsters, like the Vampire, can shapeshift, which helps them go undetected for a period of time and gain a better vantage point for spotting Humans.
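The Monster Tag match timeline (30 seconds to hide, up to 30 more seconds of disguise, an 8-minute match) can be expressed as a small phase function. This is a hedged Python sketch: the description doesn't say when sunrise falls within the 8 minutes, so `sunrise_at` is a hypothetical parameter, and the phase names and the forced transformation at the end of the disguise window are assumptions.

```python
HIDE_SECONDS = 30          # hiding window at the start of a match
CONCEAL_SECONDS = 30       # extra window in which the Monster may stay disguised
GAME_SECONDS = 8 * 60      # total match length


def can_transform(elapsed: float) -> bool:
    return elapsed >= HIDE_SECONDS


def must_transform(elapsed: float) -> bool:
    # Assumption: the disguise ends automatically when the concealment window runs out.
    return elapsed >= HIDE_SECONDS + CONCEAL_SECONDS


def monsters_invulnerable(elapsed: float, sunrise_at: float) -> bool:
    return elapsed < sunrise_at


def phase(elapsed: float, sunrise_at: float) -> str:
    if elapsed >= GAME_SECONDS:
        return "game_over"
    if not can_transform(elapsed):
        return "hide"
    if not must_transform(elapsed):
        return "optional_disguise"
    if monsters_invulnerable(elapsed, sunrise_at):
        return "night"
    return "sunrise"
```

Keeping each rule in its own predicate lets gameplay code (transform button, damage checks) ask a single question instead of re-deriving the phase.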

Stand your Ground is a single-player VR game that uses the passthrough capability of Quest headsets to emulate NVGs (night vision goggles). The objective is to clear your house of hostiles: at the start of each round, 1 to 4 hostiles spawn randomly in each room of your house.
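Spawning 1 to 4 hostiles per mapped room, each in one of the three stances the game uses (standing, crouched, prone), might look like the following Python sketch. It assumes rooms arrive as a simple list of names, whereas the real game reads them from the Quest's mapped spaces; `Hostile` and `spawn_round` are hypothetical names. Passing in a seeded `random.Random` makes rounds reproducible for testing.

```python
import random
from dataclasses import dataclass
from typing import List

STANCES = ("standing", "crouched", "prone")


@dataclass
class Hostile:
    room: str
    stance: str


def spawn_round(rooms: List[str], rng: random.Random) -> List[Hostile]:
    hostiles: List[Hostile] = []
    for room in rooms:
        count = rng.randint(1, 4)  # randint is inclusive at both ends
        hostiles.extend(Hostile(room, rng.choice(STANCES)) for _ in range(count))
    return hostiles
```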

Hostiles are sensitive to gunfire as well as to movement within their field of view, so move methodically and act fast in each room to avoid being detected or shot before you can take them out. Hostiles can be found standing, crouched, or prone, and will adjust their stance as needed to acquire their target.
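The movement-in-view rule can be approximated with a field-of-view cone check. The Python sketch below simplifies the scene to a top-down 2D plane and treats any gunfire as alerting the hostile; the function names, the 90° cone, the 10 m range, and the movement threshold are all hypothetical defaults rather than values from the game.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def in_field_of_view(hostile_pos: Point, facing_deg: float, target_pos: Point,
                     fov_deg: float = 90.0, max_range: float = 10.0) -> bool:
    dx = target_pos[0] - hostile_pos[0]
    dy = target_pos[1] - hostile_pos[1]
    dist = math.hypot(dx, dy)
    if dist > max_range:
        return False
    if dist == 0.0:
        return True
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle between facing direction and target bearing, wrapped to [-180, 180).
    diff = (bearing - facing_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def detects_player(hostile_pos: Point, facing_deg: float, player_pos: Point,
                   player_speed: float, gunshot_heard: bool,
                   move_threshold: float = 0.5) -> bool:
    if gunshot_heard:
        return True  # simplification: any shot alerts the hostile
    # Otherwise only noticeable movement inside the view cone gives the player away.
    return player_speed > move_threshold and in_field_of_view(hostile_pos, facing_deg, player_pos)
```

Wrapping the angle difference avoids the classic bug where a target at 170° and a facing of -170° look 340° apart instead of 20°.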

The game relies on spaces previously mapped through the Quest OS, including tagged furniture, windows, and doorways, and supports as many rooms as you want to add. Future updates will add roaming enemies and the ability to damage doors and walls to create new vantage points.

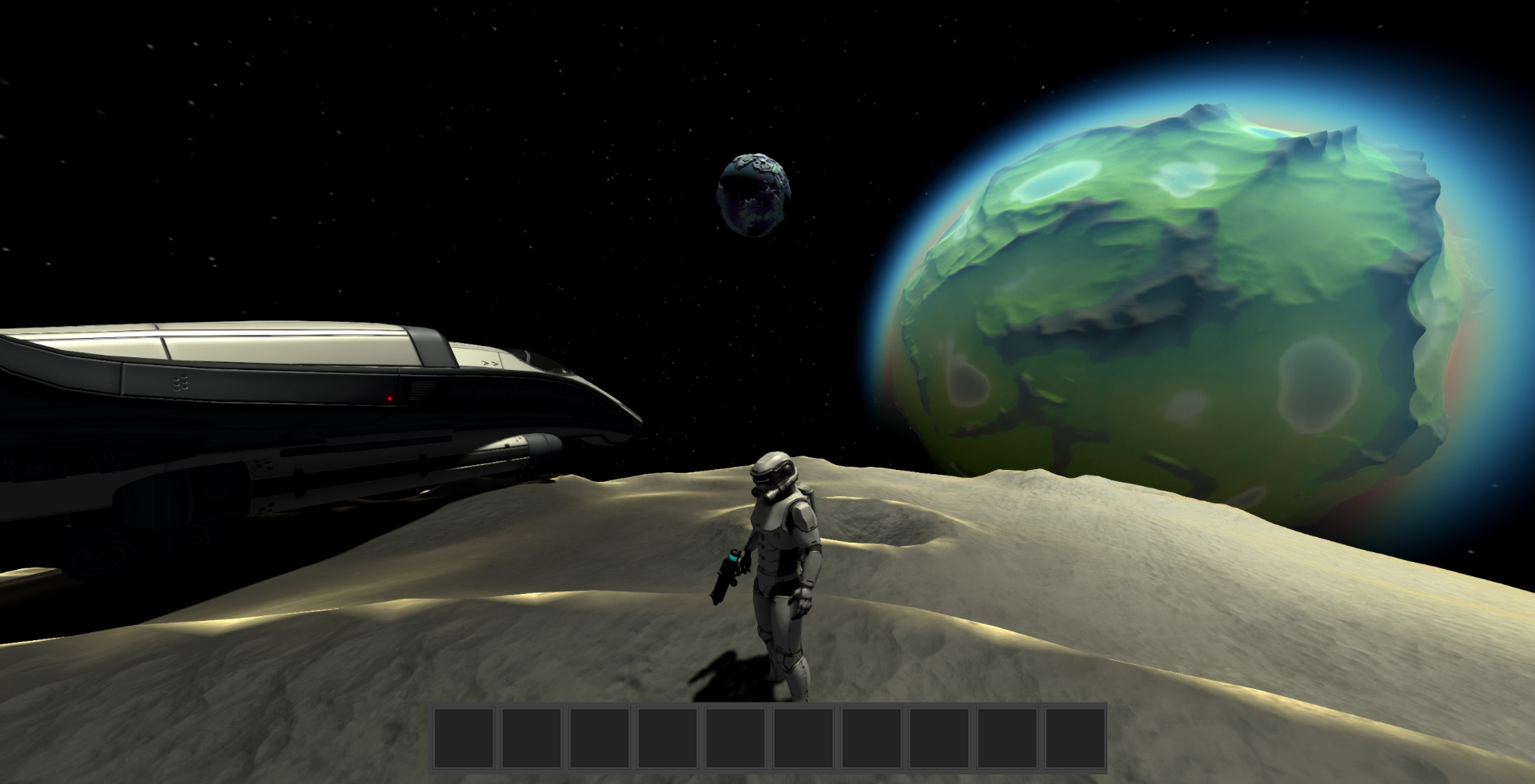

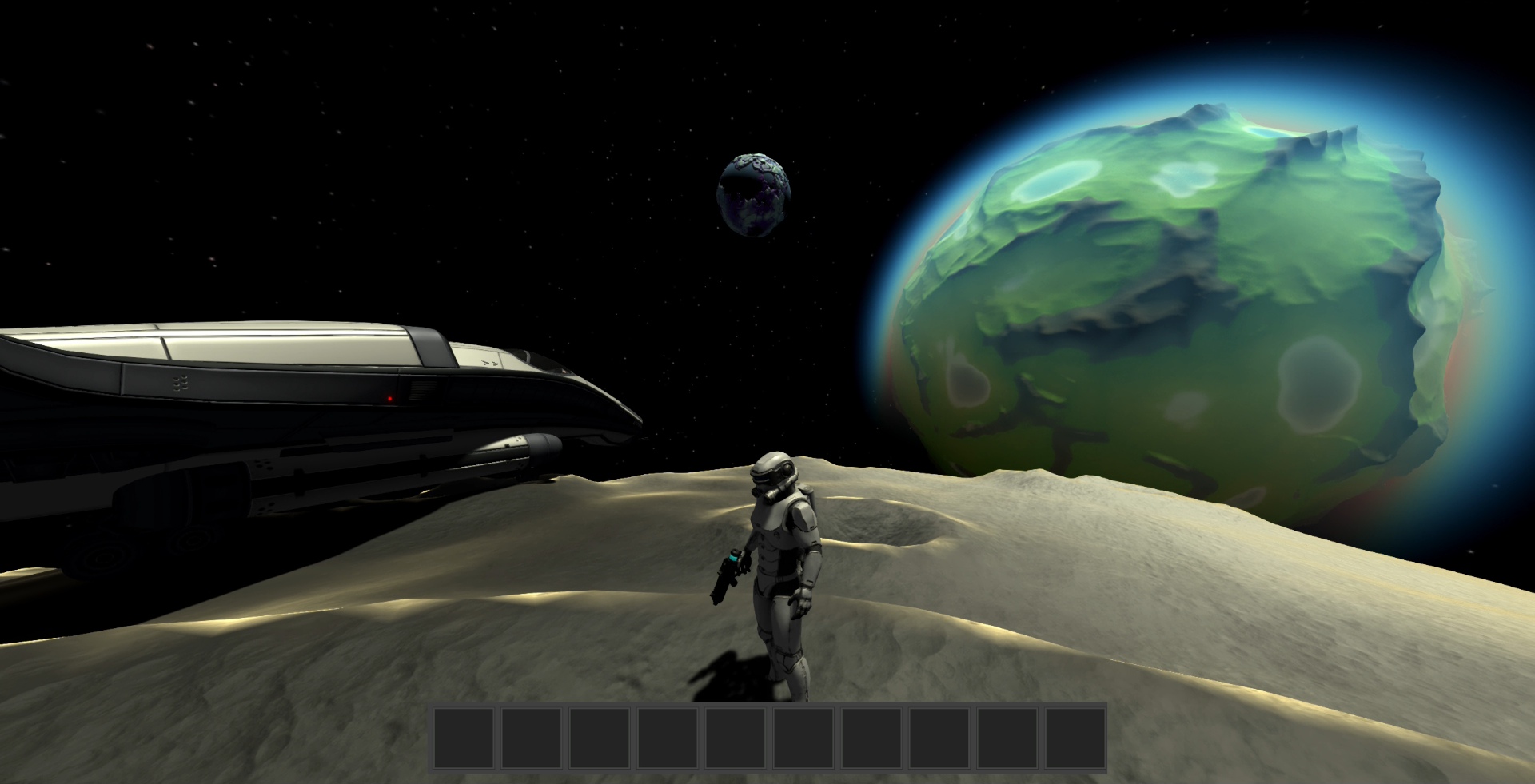

GEOS is a multiplayer space survival game set in a tiny geocentric universe.

The game is currently a proof of concept showcasing planets with different gravitational fields, atmospheres, sizes, and rotation speeds. Only early versions of spaceship flight, resource gathering, inventory management, structure placement, and shooting have been integrated so far.
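One common approach to per-planet gravity is to pull the player toward a planet's center rather than along a fixed world axis. The Python sketch below assumes an inverse-square falloff capped at surface gravity and a cutoff radius per planet; `Planet`, `gravity_from`, and `strongest_gravity` are hypothetical names, and GEOS may well use a different model.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]
ZERO: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class Planet:
    center: Vec3
    radius: float
    surface_gravity: float  # acceleration at the surface
    field_radius: float     # no pull beyond this distance from the center


def gravity_from(planet: Planet, pos: Vec3) -> Vec3:
    d = [c - p for c, p in zip(planet.center, pos)]  # points from player toward center
    dist = math.sqrt(sum(x * x for x in d))
    if dist == 0.0 or dist > planet.field_radius:
        return ZERO
    # Inverse-square falloff, clamped so it never exceeds surface gravity.
    strength = planet.surface_gravity * (planet.radius / max(dist, planet.radius)) ** 2
    return (d[0] / dist * strength, d[1] / dist * strength, d[2] / dist * strength)


def magnitude(v: Vec3) -> float:
    return math.sqrt(sum(x * x for x in v))


def strongest_gravity(planets: Iterable[Planet], pos: Vec3) -> Vec3:
    # Apply only the dominant planet's pull so a player "belongs" to one planet at a time.
    return max((gravity_from(p, pos) for p in planets), key=magnitude, default=ZERO)
```

Using only the dominant planet keeps the player oriented to a single "down" while walking a surface; summing every planet's vector instead would be closer to real physics but makes on-foot movement harder to control.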

In its current demo state, players can host an online game of up to 6 players, jump between planets in their spaceships, and explore the environments of the 3 different planets.

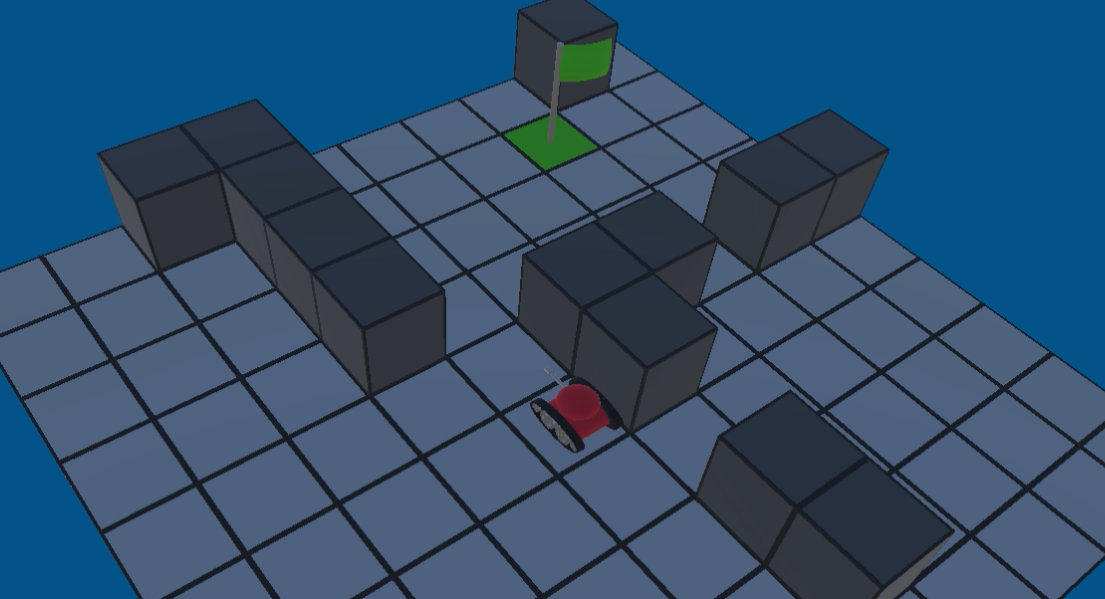

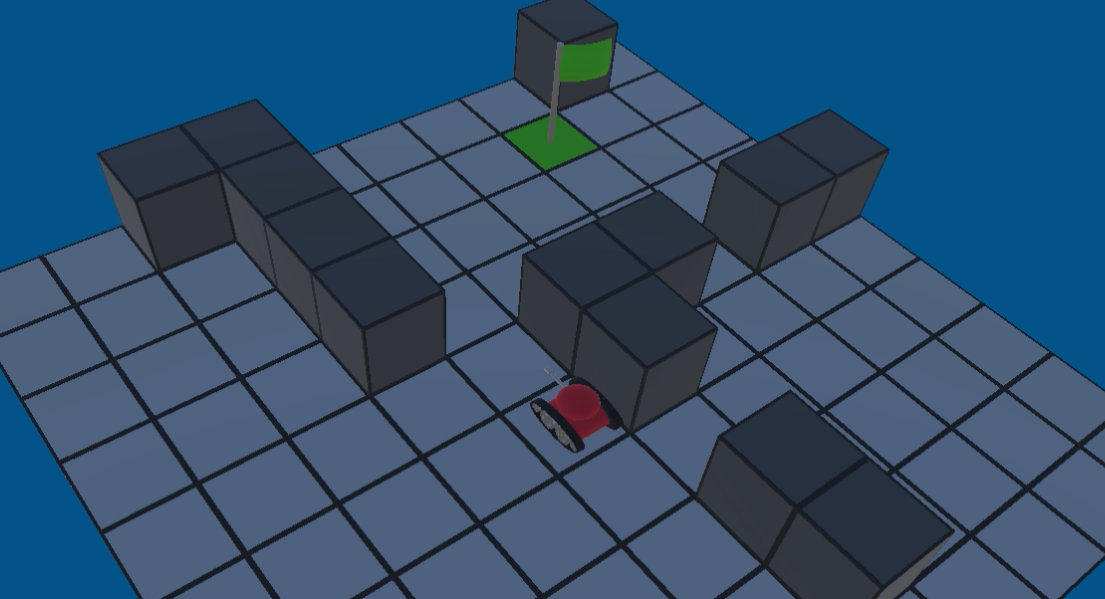

CodeBots is an early version of a drag-and-drop programming game designed to teach kids basic programming concepts. This demo was used to introduce robot programming logic to 4th and 5th graders and prepare them to program LEGO robots to complete simple tasks.

Students are presented with a map featuring a starting tile, a goal tile, obstacle blocks, and their robot. They can drag and drop any of four directional instructions onto an instruction list on the right side of the screen, and their robot's position updates on screen as instructions are added.

Pressing play shows a 3D view of the map and runs the student's instructions. If the robot hits an obstacle, the simulation stops and returns to the instruction list so the student can modify it to fix the problem.

If the robot reaches the goal tile, a congratulatory message is displayed and the student advances to the next level.
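The CodeBots run loop (step through the instruction list, stop on an obstacle, succeed on the goal tile) can be sketched on a 2D grid as follows. The `Move` enum, the `run_program` function, and the grid coordinates are hypothetical, and treating a move off the edge of the map as a crash is an assumption the description doesn't cover.

```python
from enum import Enum
from typing import List, Set, Tuple

Tile = Tuple[int, int]


class Move(Enum):
    UP = (0, -1)
    DOWN = (0, 1)
    LEFT = (-1, 0)
    RIGHT = (1, 0)


def run_program(start: Tile, goal: Tile, obstacles: Set[Tile],
                instructions: List[Move], width: int, height: int) -> Tuple[str, int, Tile]:
    """Return (outcome, step index, final tile); outcome is "goal", "crashed", or "incomplete"."""
    x, y = start
    for step, move in enumerate(instructions):
        dx, dy = move.value
        nx, ny = x + dx, y + dy
        # Assumption: leaving the map counts the same as hitting an obstacle.
        if (nx, ny) in obstacles or not (0 <= nx < width and 0 <= ny < height):
            return ("crashed", step, (x, y))
        x, y = nx, ny
        if (x, y) == goal:
            return ("goal", step, (x, y))
    return ("incomplete", len(instructions), (x, y))
```

Returning the index of the failing step could let the interface highlight the exact instruction the student needs to change when it returns to the instruction list.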

HIVE Unit was created in a 2-week game jam with one other developer and a 2D artist.

Players must call in and defend the hive buster device. Once fully activated, the device will summon the queen.

The game can be played on Windows or Mac; the download link and game design document can be found at https://lostsheepstudios.itch.io/hive-unit